Using a Data Catalog as an early alignment and design tool for your Master & Reference Data Management programs will drive faster, better and (often) cheaper results.

Master Data Management Challenges

If you’ve ever tried to stand up a Master Data Management (MDM) program in an enterprise, no doubt you’ve quickly realized how challenging mastering your data can be. While MDM has been around for several decades, its track record of success has been less than stellar.

So what makes MDM a challenge? A number of factors drive success, and establishing domain scope is often one of the first stumbling blocks:

- Should you master all data or only some?

- Which domains drive the most value?

- Who should choose?

This often leads to another challenge: How should leadership align on business priorities as they relate to master domains? Business epics such as “Customer 360,” for example, often create complex data environments. These in turn surface technical challenges, such as integration, access to data, and the inability to apply MDM-driven changes back to the source systems. Even with the best planning, many programs still fail, in part because of data culture issues. Don’t underestimate how conflicts around ownership, decision rights, and even a lack of understanding of the enterprise data can derail progress.

As you may have gathered by now, establishing an MDM program requires some thoughtfulness. The goals of MDM may vary, but a healthy program must ensure that the business has complete, consistent, current, and authoritative master and reference data across all organizational processes. This means that master data should be shared across enterprise functions and applications. Not only will this lower operating costs, but it will reduce the complexity of data usage and integration through standards, common data models, and integration patterns. In order to achieve this, organizations need to analyze data and supporting metadata, provide feedback to source systems, and use input to tune and improve the rules engines driving the MDM solution.

So how should your organization best support the master data lifecycle? This article makes the case for the data catalog as the catalyst that helps MDM teams better master not only their data, but their program as well.

Assessing the Data Landscape

Getting off on the right foot for your MDM program can be tricky for the reasons discussed earlier. Consider your project as you might a great paint job on your house or a car: It’s all in the prep work.

So how should data leaders prepare for a successful MDM initiative? Most practitioners agree that matching and merging are core concepts of MDM. Managing master record IDs (as part of the mastering process) is another key prep step leaders should prioritize. Together, these pieces support survivorship, the core process that consolidates duplicate records of an entity into a single master view.
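To make the match, merge, and survivorship steps concrete, here is a minimal Python sketch. The record fields, the email-based match key, the "newest non-empty value wins" survivorship rule, and the master_id format are all illustrative assumptions, not the behavior of any particular MDM tool.

```python
from collections import defaultdict

# Hypothetical source records; field names and values are illustrative only.
records = [
    {"source": "crm", "email": "Ann@Example.com", "name": "Ann Lee", "phone": "", "updated": "2023-01-10"},
    {"source": "erp", "email": "ann@example.com", "name": "A. Lee", "phone": "555-0100", "updated": "2023-03-02"},
    {"source": "crm", "email": "bob@example.com", "name": "Bob Ray", "phone": "555-0199", "updated": "2022-11-30"},
]

def match_key(record):
    """Match step: normalize the email so equivalent records share a key."""
    return record["email"].strip().lower()

def survive(group):
    """Survivorship step: for each attribute, keep the newest non-empty value."""
    newest_first = sorted(group, key=lambda r: r["updated"], reverse=True)
    golden = {}
    for field in ("name", "phone"):
        golden[field] = next((r[field] for r in newest_first if r[field]), "")
    return golden

def master(records):
    """Merge step: group matched records and assign one master_id per group."""
    groups = defaultdict(list)
    for record in records:
        groups[match_key(record)].append(record)
    golden_records = []
    for number, key in enumerate(sorted(groups), start=1):
        golden = survive(groups[key])
        golden["email"] = key
        golden["master_id"] = f"M{number:04d}"  # assumed ID format
        golden_records.append(golden)
    return golden_records

golden_records = master(records)
for golden in golden_records:
    print(golden)
```

In practice the match key and survivorship rule are exactly the kind of design decisions worth documenting and reviewing before they are configured in an MDM tool.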

How can you determine the most trusted data assets to master? And how do you ensure your MDM program improves with time? While most MDM tools provide robust execution engines for these work streams, they often fall short when it comes to early analysis and discovery (defining a starting point) or, subsequently, driving continuous process improvement after the Golden Record is created. We’re talking about stewardship here, which is critical to orchestrating the mastering lifecycle. Stewardship is also the human touch needed to address data that falls out of the mastering process. In other words, MDM programs need continuous process improvement if you’re to address why records fall out in the first place.

Just like data quality and governance, stewardship is key. Why? MDM architects suffer from the same pains that affect business analysts, data science teams, and report writers: how to find, understand, and trust the data. Your organization’s data is likely stored in different ways to meet different needs. Data lives in tables in relational databases, in data lakes or warehouses, or even in ERP or RDM systems. In a sense, one could say that data lives in the neighborhoods it works in. Cataloging these sources in advance provides a significant leg up in understanding the scope, as well as early insight into the complexity of the data or any sprawl.

By connecting the MDM program to stewardship and design techniques provided by the data catalog (search, metadata curation and collaboration), teams are better able to drive effective design and review of mastering rules and models. The right data catalog should provide significant collaborative and structured workflow capability to empower MDM architects and business analysts as they work to classify data (domain and otherwise). The right platform will also help teams leverage stewardship, governance, and change management workflows for any number of MDM design and documentation efforts.

Entity Resolution is Foundational

Duplicate records are a common challenge for any data-driven business. Entity resolution describes the technique of identifying these records across data sources and linking them together. It is a well-regarded mastering technique that helps form a solid foundation from which any program can grow. The concept was developed by John R. Talburt, PhD. I first learned of Dr. Talburt’s approach to entity resolution while I was working on the second edition of DAMA’s Data Management Body of Knowledge. Some years later I was fortunate to work with John on an MDM project on which we were both consulting. As he lays out in his book, Entity Resolution and Information Quality, entity resolution is about determining when references to real-world entities are equivalent (refer to the same entity) or not equivalent (refer to different entities).

In its broadest sense, entity resolution encompasses five major activities:

- Entity reference extraction,

- Entity reference preparation,

- Entity reference resolution,

- Entity identity management, and

- Entity relationship analysis.

Data leaders typically rely on an enterprise data catalog to develop a deep understanding of entities while documenting the life cycle correlation of their landscape. As a metadata management platform, a data catalog is the ideal environment for understanding and reconciling disparate assets with an eye to how and why they were created.

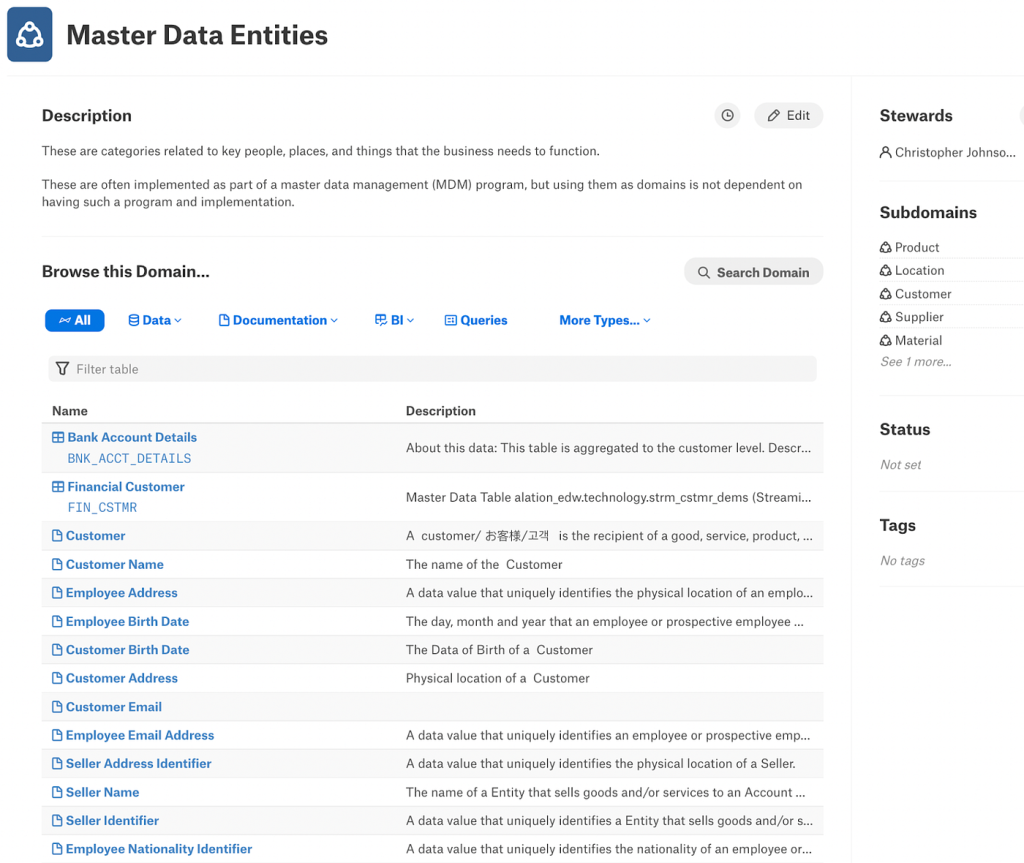

Alation’s Data Catalog leverages domains

For example, best practice defines the importance of appending common identifiers to reference instances, as this helps to denote the decision that they are equivalent. (Talburt, 2011)

This may manifest as the creation of metadata that describes the structure and process of that common identifier, such as master_id, match_id, and merge_file_id attributes, and how they get created or populated. It’s an excellent example of how metadata can drive identity resolution, record linking, record matching, record deduplication, merge-purge, and a range of entity analytics. In this way, leaders can add continuous integration and continuous delivery (CI/CD) to their MDM strategy.
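As a rough illustration of appending a common identifier to references that have been judged equivalent, the sketch below links pairwise match decisions with a small union-find structure and stamps every linked reference with the same master_id. The reference IDs, the matched pairs, and the "MID-" prefix are hypothetical; only the master_id attribute name comes from the discussion above.

```python
def assign_master_ids(reference_ids, matched_pairs):
    """Give every set of linked references one shared master_id."""
    parent = {ref: ref for ref in reference_ids}

    def find(ref):
        # Walk up to the root of the set, shortening the path as we go.
        while parent[ref] != ref:
            parent[ref] = parent[parent[ref]]
            ref = parent[ref]
        return ref

    for left, right in matched_pairs:
        root_left, root_right = find(left), find(right)
        if root_left != root_right:
            # Keep the smaller ID as root so the result is deterministic.
            parent[max(root_left, root_right)] = min(root_left, root_right)

    return {ref: f"MID-{find(ref)}" for ref in reference_ids}

# Hypothetical references from three sources and two match decisions.
reference_ids = ["crm:17", "erp:204", "web:9", "crm:42"]
matched_pairs = [("crm:17", "erp:204"), ("erp:204", "web:9")]

master_ids = assign_master_ids(reference_ids, matched_pairs)
for ref, master_id in master_ids.items():
    print(ref, "->", master_id)
```

Because the two match decisions share "erp:204", all three references end up under one identifier even though "crm:17" and "web:9" were never compared directly. How such identifiers are created and populated is the kind of metadata worth recording in the catalog.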

Yet to make metadata actionable in this way you’ll need a data catalog. When seeking an MDM-friendly catalog, leaders should look for features like articles, tags, custom fields, and catalog sets. They should also ensure that decisions made in the resolution process can be recorded in the catalog for posterity. That way, when future questions arise, answers can be easily referenced and communicated to stakeholders across the organization.

Don’t Forget Reference Data

The concepts laid out here also apply to reference data management. While often less complex, reference data management programs also benefit from the power of a data catalog.

“Reference Data is any data used to characterize or classify other data, or to relate data to information external to an organization” (Chisholm, 2001)

Typical reference data may consist of codes and descriptions, but may be more complex and incorporate cross-functional relationship metadata. Most often, however, reference sources will focus on classification and categorization such as statuses or types (e.g., Order Status: New, In Progress, Closed, Canceled). They may also include external information such as geographic or standards information (e.g., Country Code: DE, US, TR). The moral of this section is that if you’re not cataloging your reference data, you should be.
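A small sketch of how cataloged reference sets might be used to check a source: the Python below flags values that fall outside an agreed code list. The code keys, descriptions, and order rows are assumptions made up for illustration, loosely based on the Order Status and Country Code examples above.

```python
# Reference sets loosely based on the examples in the text; the code keys
# and descriptions are illustrative assumptions.
ORDER_STATUS = {
    "NEW": "New",
    "IN_PROGRESS": "In Progress",
    "CLOSED": "Closed",
    "CANCELED": "Canceled",
}
COUNTRY_CODE = {"DE": "Germany", "US": "United States", "TR": "Turkey"}

def invalid_codes(rows, column, reference_set):
    """Return the distinct values in `column` that are not in the reference set."""
    return sorted({row[column] for row in rows if row[column] not in reference_set})

# Hypothetical order rows from a source system.
orders = [
    {"order_id": 1, "status": "NEW", "country": "DE"},
    {"order_id": 2, "status": "SHIPPED", "country": "US"},
    {"order_id": 3, "status": "CLOSED", "country": "UK"},
]

bad_statuses = invalid_codes(orders, "status", ORDER_STATUS)
bad_countries = invalid_codes(orders, "country", COUNTRY_CODE)
print(bad_statuses, bad_countries)
```

Values that fail the check are candidates for stewardship review: either the source is wrong or the reference set is incomplete.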

Defining Rules for Mastering Golden Records

If done well, MDM programs will also drive improvements in metadata, usually by way of quality assessments. A data catalog can assist MDM teams by driving clarification and definitions along with the visual inspection of attributes. Here again, it’s critical to document and collaborate on design thinking for rule development, testing, and ultimately the governance of the match, merge, and survivorship rules that MDM tools execute downstream.

While data catalogs are not replacements for an MDM execution platform, some catalogs do provide considerable out-of-the-box functionality to help MDM designers and architects design or evaluate rules. Using the data catalog to apply bulk classification and categorization will help to scope the tooling and execution requirements. It’s also another opportunity to document these decisions.

Additionally, data stewards will need to collaborate with business teams on reconciliation and agreement as it relates to rules impacting entity names and enterprise level definitions. As teams are able to find, understand, and rationalize data elements and attributes, they also begin to understand the quality of the data. Data quality problems will complicate an MDM project, so the assessment process should address root causes of data issues. A data catalog that offers a suite of stewardship tools will empower your stewards and other key stakeholders to assess source quality and suitability of data for a master data environment alongside critical context.
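One simple way to picture a source-quality assessment is a per-attribute profile. The sketch below computes completeness and distinct-value counts for a handful of rows; the column names, sample rows, and the choice of these two measures are assumptions for illustration, and a real assessment would typically cover far more.

```python
def profile(rows, columns):
    """Report completeness (share of non-empty values) and distinct count per column."""
    total = len(rows)
    report = {}
    for column in columns:
        values = [row.get(column) for row in rows]
        filled = [value for value in values if value not in (None, "")]
        report[column] = {
            "completeness": len(filled) / total if total else 0.0,
            "distinct": len(set(filled)),
        }
    return report

# Hypothetical customer rows from a candidate source.
rows = [
    {"customer_id": "C1", "email": "ann@example.com", "phone": "555-0100"},
    {"customer_id": "C2", "email": "", "phone": "555-0100"},
    {"customer_id": "C3", "email": "bob@example.com", "phone": None},
    {"customer_id": "C4", "email": "ann@example.com", "phone": ""},
]

report = profile(rows, ["customer_id", "email", "phone"])
for column, stats in report.items():
    print(column, stats)
```

Here a repeated email across different customer IDs hints at possible duplicates, and a sparsely populated phone column suggests it would make a weak match attribute.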

Data Models and Dictionaries Inform Source Analysis

Another critical tool in the MDM architect’s tool belt is the data model. While catalogs can support legacy data model visualizations such as entity relationship diagrams, architects will also benefit when models are built from source data automatically. In the Alation data catalog, for example, models are automatically created and associated (along with supporting metadata) via ontologies. These models allow users to browse and understand both logical and physical model structure using natural language titling and descriptions. The catalog example above automates the creation of these ontological data models as it extracts metadata from sources. To further assist the MDM team, natural language enhancements are applied through a combination of machine learning and human stewardship.

When MDM programs catalog their sources in advance of the mastering, MDM architects are able to leverage data dictionaries that summarize these natural titles and descriptions in addition to visualizing the physical properties. This includes custom field values and child data hierarchies as well. The dictionary will also include curated data source information that is added to the catalog by stewards and users. It cannot be overstated how critical this metadata is to driving effective mastering discussions.

Data Catalogs — Your MDM Whiteboard

Manage what matters. It’s foundational to effective data literacy and trust, and it certainly applies to a master or reference data management program, where it’s easy to over-index on scope during design. Leverage your data catalog to ‘right-size’ your efforts. It’s just good advice to start small and grow at a managed pace. This will ensure you don’t bite off more than you can chew. To the extent that teams are able to evaluate and assess data sources in preparation for scoping and designing the Master Data Management program, the catalog as a ‘single pane of glass’ will give them lift.

For an MDM team, it’s imperative to know what data lives where and why. The data catalog can be a critical tool to help people understand the structure and content of operational and reference data and the processes through which it is created, aggregated or reported on.

Think of the catalog as a metadata whiteboard. It’s an excellent platform to help the MDM team with early design thinking relative to scope and approach, which incidentally will also document the process as you go. The catalog will help lay a great foundation for your Master Data Management project. In addition, it’s a wonderful place to anchor enterprise stewardship to the program as well.
